
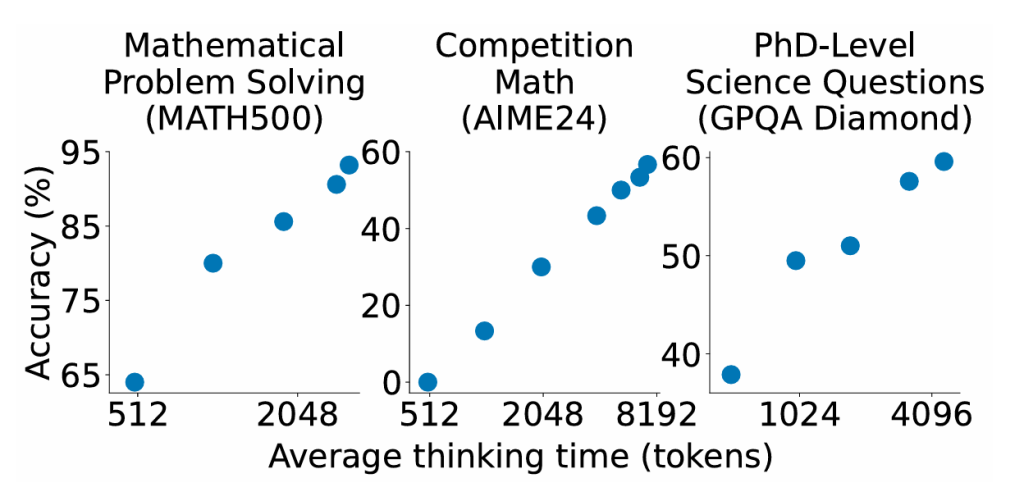

A chart from the s1 research paper shows accuracy improving as thinking time goes up.

In a new demonstration of the potential to improve artificial intelligence without breaking the bank, researchers from the University of Washington, Seattle’s Allen Institute for AI (Ai2), and Stanford University have developed a technique that makes AI models “think” longer before answering.

The idea is to boost AI reasoning abilities while significantly reducing training costs.

The method, called “simple test-time scaling” in a recent research paper, forces the AI to keep processing if it tries to stop reasoning and answer a query too early. The researchers found that this extra processing helps the model review and improve its answer, often avoiding mistakes in the output.
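The s1 paper calls this control technique “budget forcing”: when the model tries to end its thinking too early, the stop is suppressed and the word “Wait” is appended so it keeps reasoning, while a maximum budget caps how long it can think. Below is a minimal sketch of that loop, not the authors' code. It assumes a hypothetical `step` function standing in for the model, and it measures thinking in steps rather than tokens purely to keep the example short.

```python
from typing import Callable, Tuple

# Hypothetical model interface (an assumption, not s1's API): given the reasoning
# trace so far, return the next chunk of text and whether the model is trying
# to end its thinking.
StepFn = Callable[[str], Tuple[str, bool]]


def budget_forced_reasoning(step: StepFn, prompt: str, min_steps: int,
                            max_steps: int, nudge: str = "Wait") -> str:
    """Run a reasoning loop that ignores stop attempts before min_steps.

    Thinking is counted in steps here for simplicity; a real system would
    count tokens.
    """
    if min_steps > max_steps:
        raise ValueError("min_steps must not exceed max_steps")
    trace = prompt
    for steps_taken in range(1, max_steps + 1):
        chunk, wants_to_stop = step(trace)
        trace += chunk
        if wants_to_stop and steps_taken >= min_steps:
            break  # the model has thought long enough; accept the stop
        if wants_to_stop:
            # Stopping too early: suppress the stop and nudge the model onward.
            trace += " " + nudge
    # Here the model either stopped on its own or hit the max_steps budget.
    return trace


def toy_model(trace: str) -> Tuple[str, bool]:
    """Toy stand-in that tries to stop after every chunk."""
    return " step", True


print(budget_forced_reasoning(toy_model, "Q:", min_steps=3, max_steps=10))
# → Q: step Wait step Wait step
```

The toy model tries to quit after its first chunk, so the loop inserts “Wait” twice before accepting a stop at the third step. Raising `min_steps` lengthens the forced thinking, which is the knob the chart above plots against accuracy.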

The model, dubbed s1, is open source on GitHub, along with its data and code.

It’s part of a broader trend, exemplified by DeepSeek, toward finding efficient new ways to improve AI performance while reducing the cost of training AI models. The computing resources described in the paper for training the s1 reasoning model would amount to less than $50 in cloud computing credits, TechCrunch reports.

Researchers listed on the paper are: Niklas Muennighoff (Stanford, Ai2, Contextual AI), Zitong Yang (Stanford), Weijia Shi (UW), Xiang Lisa Li (Stanford), Fei-Fei Li (Stanford), Hannaneh Hajishirzi (UW, Ai2), Luke Zettlemoyer (UW), Percy Liang (Stanford), Emmanuel Candès (Stanford), and Tatsunori Hashimoto (Stanford).
