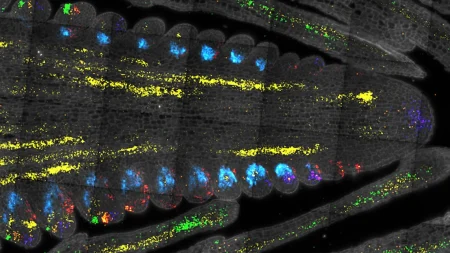

The rise of large language models (LLMs) comes with significant costs: legal fees for access to training data, computational power, and energy consumption. Smaller research groups and individuals often lack such resources, which makes it difficult for them to create their own LLMs for specialized tasks. In response, researchers at Washington University in St. Louis (WashU) have developed a cost-effective alternative: an autonomous agent that instructs the reasoning process of LLMs. The agent generates step-by-step instructions for tasks and has been shown to significantly improve the reasoning of various LLMs.

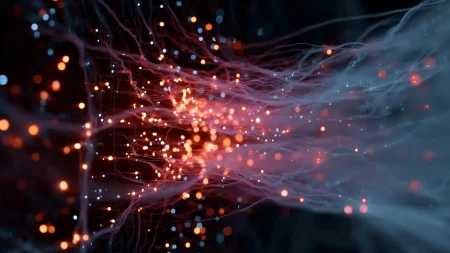

The autonomous agent developed by the WashU researchers works as a tool that reasons over instructions drawn from the web. Given basic task information and input examples, the agent produces high-quality instructions that guide the reasoning of smaller LLMs on specific tasks. The method is more affordable because the large LLM is used only once per dataset; the instructions are then handed to a smaller LLM, which takes over the task. This approach, known as Zero-Shot AgentInstruct, has been shown to boost the performance of state-of-the-art LLMs by a significant margin, particularly on tasks related to math and logic.
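The division of labor described above can be sketched in a few lines of Python. This is a minimal illustration, not the researchers' implementation: the `LLM` callable interface, the function names, the prompt wording, and the stub models are all assumptions made for the example. It shows only the cost structure, one call to the large model per dataset and one call to the small model per question.

```python
from typing import Callable, Dict, List

# Hypothetical interface (an assumption): a model is any function prompt -> text.
LLM = Callable[[str], str]


def generate_instructions(large_llm: LLM, task_info: str, examples: List[str]) -> str:
    """Ask the large model ONCE per dataset for step-by-step instructions."""
    shown = "\n".join(f"- {e}" for e in examples)
    prompt = (
        f"Task: {task_info}\n"
        f"Example inputs:\n{shown}\n"
        "Write step-by-step instructions for solving this kind of task."
    )
    return large_llm(prompt)


def answer_with_instructions(small_llm: LLM, instructions: str, question: str) -> str:
    """Let the smaller model answer one question, guided by the instructions."""
    prompt = (
        f"Instructions:\n{instructions}\n\n"
        f"Question: {question}\n"
        "Follow the instructions step by step."
    )
    return small_llm(prompt)


def run_dataset(large_llm: LLM, small_llm: LLM, task_info: str,
                examples: List[str], questions: List[str]) -> List[str]:
    """Generate instructions once, then reuse them for every question."""
    instructions = generate_instructions(large_llm, task_info, examples)
    return [answer_with_instructions(small_llm, instructions, q) for q in questions]


# Stub models (illustrative only) that count how often each one is called.
calls: Dict[str, int] = {"large": 0, "small": 0}


def fake_large(prompt: str) -> str:
    calls["large"] += 1
    return "1. Identify the quantities. 2. Set up the equation. 3. Solve it."


def fake_small(prompt: str) -> str:
    calls["small"] += 1
    return f"answer #{calls['small']}"


answers = run_dataset(
    fake_large,
    fake_small,
    task_info="grade-school math word problems",
    examples=["Tom has 3 apples and buys 2 more. How many does he have?"],
    questions=["What is 2 + 3?", "What is 10 - 4?", "What is 6 * 7?"],
)
print(calls)
```

With real models substituted for the stubs, the expensive model's cost stays fixed per dataset, while the per-question cost is that of the smaller model.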

The researchers tested the method on language processing tasks and compared it with zero-shot prompting methods across various LLMs. Evaluated on 29 datasets, Zero-Shot AgentInstruct outperformed the other methods across a variety of tasks. The researchers described the improvement in thinking and reasoning, especially on math and logic tasks, as striking. Using a powerful LLM to distill a task into step-by-step reasoning paths for a smaller model is akin to an experienced teacher sharing knowledge with students: the smaller model improves its reasoning without any additional training.

The method addresses the need for more accessible and affordable LLMs. By using a large LLM to generate instructions that guide the reasoning of smaller models, the WashU researchers have demonstrated the potential for significant performance improvements across a range of tasks. The approach offers a more practical and economical way to apply LLMs to specialized tasks, putting them within reach of researchers and individuals who lack the resources to build their own large language models.

The work is a collaboration between researchers at Washington University in St. Louis and the University of California, Berkeley. Their autonomous agent, which provides step-by-step instructions for tasks, has shown promising results in improving the performance of various LLMs. By using large models to guide the thinking process of smaller ones, the researchers have demonstrated the potential for significant advances in generative AI, particularly on tasks related to math and logic.

In conclusion, the method developed by the WashU researchers offers a practical way to improve the reasoning capabilities of LLMs without additional training. Because a large LLM generates the instructions and smaller models carry out the tasks, the approach is more affordable and accessible than building a large model for each specialized task. The autonomous agent has the potential to benefit researchers, educators, and individuals who want to apply language models to a wide range of applications.