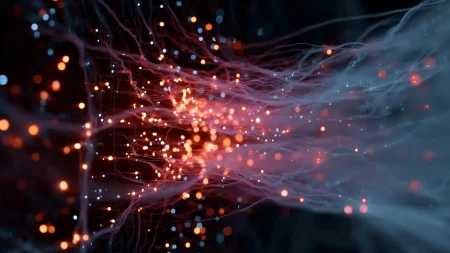

Robots trained on a limited amount of task-specific data often struggle to perform new tasks in unfamiliar environments. To address this challenge, MIT researchers have developed a technique called Policy Composition (PoCo) that combines data from multiple domains, modalities, and tasks using diffusion models, a type of generative AI. The researchers train separate diffusion models, each of which learns a strategy, or policy, for completing an individual task, and then combine these policies into a general policy that lets a robot perform multiple tasks in different settings. In simulations and real-world experiments, this approach improved task performance by 20% compared with baseline methods.

The team, led by MIT graduate student Lirui Wang, trained diffusion models on different datasets, such as human video demonstrations and teleoperation data. They then combined the policies learned by these models, iteratively refining the output so that it satisfied the objectives of each individual policy. This lets the combined policy draw on the strengths of its components: a policy trained on real-world data, for example, can offer more dexterity, while one trained in simulation can generalize better. The combined policies improved performance on tool-use tasks such as hammering a nail and flipping an object with a spatula.
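The article does not spell out how the policies are combined mathematically. One common way to compose diffusion models, assumed here purely for illustration, is to take a weighted sum of each model's noise prediction at every refinement step, so the shared sample is pushed toward what all policies consider likely. In this minimal sketch the two "policies" are hypothetical closed-form Gaussian denoisers standing in for trained networks; the names `make_gaussian_denoiser` and `composed_sample`, the noise schedule, and the weights are all assumptions, not details from PoCo.

```python
import numpy as np

# Toy setup (hypothetical): each "policy" models trajectories of HORIZON
# 2-D waypoints as a Gaussian, so its ideal noise predictor is closed-form.
# A real system would use trained neural networks instead.
HORIZON, DIM, STEPS = 16, 2, 200
BETAS = np.linspace(1e-4, 0.05, STEPS)   # assumed linear noise schedule
ALPHAS = 1.0 - BETAS
ALPHA_BARS = np.cumprod(ALPHAS)


def make_gaussian_denoiser(mean, std):
    """Return eps_hat(x_t, t), the optimal noise estimate for data ~ N(mean, std^2 I)."""
    def eps_hat(x_t, t):
        abar = ALPHA_BARS[t]
        var = abar * std**2 + (1.0 - abar)  # variance of the noised data at step t
        return np.sqrt(1.0 - abar) * (x_t - np.sqrt(abar) * mean) / var
    return eps_hat


def composed_sample(denoisers, weights, rng):
    """DDPM ancestral sampling driven by a weighted sum of noise predictions."""
    x = rng.standard_normal((HORIZON, DIM))  # start from a pure-noise trajectory
    for t in reversed(range(STEPS)):
        # Combine the policies at every refinement step, not just at the end.
        eps = sum(w * d(x, t) for d, w in zip(denoisers, weights))
        x = (x - BETAS[t] / np.sqrt(1.0 - ALPHA_BARS[t]) * eps) / np.sqrt(ALPHAS[t])
        if t > 0:
            x = x + np.sqrt(BETAS[t]) * rng.standard_normal(x.shape)
    return x


rng = np.random.default_rng(0)
real_policy = make_gaussian_denoiser(np.zeros((HORIZON, DIM)), std=0.05)  # e.g. real-robot data
sim_policy = make_gaussian_denoiser(np.ones((HORIZON, DIM)), std=0.05)    # e.g. simulation data
traj = composed_sample([real_policy, sim_policy], weights=[0.5, 0.5], rng=rng)
print(f"mean waypoint value: {traj.mean():.2f}")  # lands near 0.5, between the two policies
```

With equal-variance Gaussians and weights that sum to 1, summing the weighted noise predictions samples exactly from a weighted product of the two distributions, which is why the trajectory lands between them. With real networks, summing predictions only approximates that product, and the weights become a tuning knob for how much each data source influences the result.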

PoCo builds on Diffusion Policy, a technique introduced by researchers at MIT, Columbia University, and the Toyota Research Institute. Diffusion Policy teaches a diffusion model to generate robot trajectories by iteratively refining random noise into a usable trajectory, and PoCo uses this approach to generalize robot policies across multiple tasks and environments. Because policies trained on different datasets can be combined, the method provides a flexible framework for adapting to new tasks or adding data from new modalities and domains without restarting training from scratch.
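The core loop of a diffusion policy can be illustrated with a minimal sketch: start from a random trajectory and repeatedly subtract the predicted noise, conditioning each prediction on the robot's current observation. Several assumptions stand in for details the article does not give: the trained network is replaced by a hypothetical closed-form `denoiser` for straight-line reaching demonstrations, the update is a deterministic DDIM-style step (one common sampler for diffusion models), and the names, shapes, and schedule values are illustrative only.

```python
import numpy as np

HORIZON, STEPS = 16, 100                  # waypoints per trajectory, refinement steps
BETAS = np.linspace(1e-4, 0.1, STEPS)     # assumed linear noise schedule
ALPHA_BARS = np.cumprod(1.0 - BETAS)


def denoiser(x_t, t, obs, demo_std=0.02):
    """Hypothetical stand-in for a trained, observation-conditioned network.

    Assumes demonstrations are straight-line reaches from obs["start"] to
    obs["goal"] plus Gaussian jitter, which gives a closed-form noise estimate.
    """
    mean = np.linspace(obs["start"], obs["goal"], HORIZON)  # shape (HORIZON, 2)
    abar = ALPHA_BARS[t]
    var = abar * demo_std**2 + (1.0 - abar)
    return np.sqrt(1.0 - abar) * (x_t - np.sqrt(abar) * mean) / var


def sample_trajectory(obs, rng):
    """Refine a random trajectory into an action sequence (deterministic DDIM step)."""
    x = rng.standard_normal((HORIZON, len(obs["start"])))
    for t in reversed(range(STEPS)):
        eps = denoiser(x, t, obs)
        abar = ALPHA_BARS[t]
        abar_prev = ALPHA_BARS[t - 1] if t > 0 else 1.0
        # Current guess of the clean trajectory, then step to a less noisy version.
        x0_hat = (x - np.sqrt(1.0 - abar) * eps) / np.sqrt(abar)
        x = np.sqrt(abar_prev) * x0_hat + np.sqrt(1.0 - abar_prev) * eps
    return x


rng = np.random.default_rng(0)
obs = {"start": np.array([0.0, 0.0]), "goal": np.array([0.3, 0.5])}
actions = sample_trajectory(obs, rng)
print(actions[0], actions[-1])  # first and last waypoints, near the start and goal
```

Predicting a whole sequence of waypoints at once, rather than one action at a time, is what lets the same refinement loop produce smooth, multi-step motions.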

Looking ahead, the MIT team aims to apply PoCo to long-horizon tasks in which a robot uses multiple tools, and to improve performance by incorporating larger robotics datasets. The researchers believe that success in robotics will require combining internet data, simulation data, and real robot data, and they see PoCo as a step in that direction. By drawing effectively on such heterogeneous data sources, they hope to enable robots to learn and adapt to a wide range of tasks and environments.

The study’s coauthors include graduate students Jialiang Zhao and Yilun Du, as well as professors Edward Adelson and Russ Tedrake. The research, funded by organizations including Amazon, the Singapore Defense Science and Technology Agency, the U.S. National Science Foundation, and the Toyota Research Institute, will be presented at the Robotics: Science and Systems Conference. By using diffusion models to combine policies trained on disparate datasets, the team has demonstrated a more effective way to train multipurpose robots that perform various tasks, adapt to new environments, and achieve better task performance.