Researchers have long studied the energy costs of computing systems, whether biological or synthetic. This cost is tied to the work required to run a program and the heat dissipated in the process. Previous research on the energy cost of computation has focused on basic symbolic computations that do not transfer easily to real-world scenarios. In a recent paper published in Physical Review X, a group of physicists and computer scientists has expanded the modern theory of the thermodynamics of computation by introducing mathematical equations that reveal the minimum and maximum predicted energy cost of computational processes that depend on randomness.

The new framework makes it possible to compute energy-cost bounds for processes with unpredictable outcomes. For example, when a coin-flipping simulator stops after achieving 10 heads, or a biological cell stops producing a protein after eliciting a certain reaction from another cell, the time required to reach the goal varies from one run to the next. The study, conducted by researchers including Santa Fe Institute (SFI) Professor David Wolpert and SFI graduate fellow Gülce Kardes, uncovers a way to lower-bound the energetic costs of such situations and, more generally, of arbitrary computational processes.
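To make the coin-flipping example concrete, the sketch below simulates a process that halts once it has seen 10 heads and records how many flips each run took. It illustrates only the variable stopping time, not the energy bounds from the paper. The function names, the fair-coin probability, and the reading of "10 heads" as 10 heads in total (rather than 10 in a row) are assumptions made for illustration.

```python
import random
from statistics import mean


def flips_until_heads(target_heads, p_heads, rng):
    """Count the coin flips needed to see `target_heads` heads in total."""
    flips = 0
    heads = 0
    while heads < target_heads:
        flips += 1
        # rng.random() is uniform on [0, 1), so this is heads with probability p_heads.
        if rng.random() < p_heads:
            heads += 1
    return flips


def sample_stopping_times(trials, target_heads=10, p_heads=0.5, seed=0):
    """Run the stopping process `trials` times and return the flip counts."""
    rng = random.Random(seed)  # seeded so repeated runs give the same samples
    return [flips_until_heads(target_heads, p_heads, rng) for _ in range(trials)]


if __name__ == "__main__":
    times = sample_stopping_times(trials=2000)
    print("shortest run:", min(times))
    print("longest run:", max(times))
    print("average run:", mean(times))
```

Under these assumptions a run can never be shorter than 10 flips, and for a fair coin the average run length is 20 flips, but individual runs scatter widely around that value. That run-to-run spread is the kind of unpredictability the framework is built to handle.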

Wolpert and his colleagues have developed the idea of a “mismatch cost,” a measure of how much the cost of a computation exceeds Landauer’s bound. This bound, proposed by physicist Rolf Landauer in 1961, sets the minimum amount of heat that must be dissipated when information in a computer is changed, for example when a bit is erased. Knowing the mismatch cost could inform strategies for reducing the overall energy cost of a system. By extending tools from stochastic thermodynamics to the calculation of a mismatch cost for common computational problems, the researchers have opened up new avenues for finding the lowest energy needed for computation in any system, regardless of its implementation.
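For a sense of scale, Landauer’s bound in its standard form says that erasing one bit at temperature T dissipates at least k_B T ln 2 of heat, which is about 2.87e-21 joules at 300 K. The sketch below evaluates that bound and the gap between it and a given amount of dissipated energy. The function names and the dissipation figure in the usage lines are hypothetical. The simple difference computed here only mirrors the article’s informal description; it is not the formal definition of mismatch cost used in the paper.

```python
import math

# Boltzmann constant in joules per kelvin (exact value in the SI system).
BOLTZMANN_J_PER_K = 1.380649e-23


def landauer_bound_joules(bits_erased, temperature_kelvin):
    """Minimum heat dissipated when erasing `bits_erased` bits: k_B * T * ln 2 per bit."""
    return bits_erased * BOLTZMANN_J_PER_K * temperature_kelvin * math.log(2)


def excess_over_landauer(dissipated_joules, bits_erased, temperature_kelvin):
    """How far a given dissipation sits above the Landauer bound (illustrative only)."""
    return dissipated_joules - landauer_bound_joules(bits_erased, temperature_kelvin)


if __name__ == "__main__":
    bound = landauer_bound_joules(bits_erased=1, temperature_kelvin=300.0)
    print(f"Landauer bound for one bit at 300 K: {bound:.3e} J")

    # Hypothetical device figure, chosen only to show the arithmetic.
    hypothetical_dissipation = 1e-15
    gap = excess_over_landauer(hypothetical_dissipation, 1, 300.0)
    print(f"Excess over the bound: {gap:.3e} J")
```

The bound scales linearly with both temperature and the number of bits erased, so the same function covers multi-bit operations.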

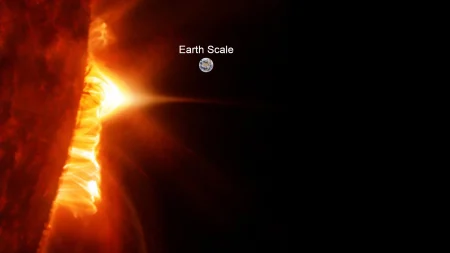

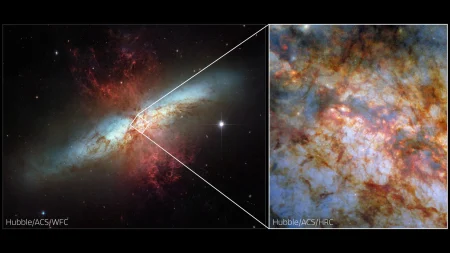

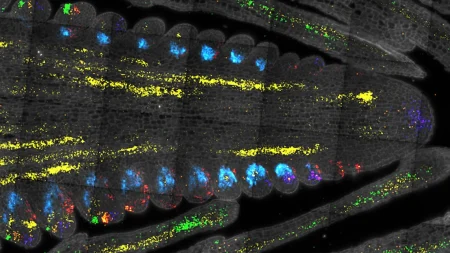

The team’s research points to a new approach for making computing more energy efficient. Computers currently use between 5% and 9% of global generated power, and estimates suggest this could reach 20% by 2030, so finding ways to reduce energy consumption is crucial. Modern computers are considered grossly inefficient compared with biological systems, which are about 100,000 times more energy-efficient. By developing a general thermodynamic theory of computation, researchers aim to discover new strategies for reducing energy consumption in real-world machines.

One potential practical application of this research is the development of more energy-efficient computer chip architectures. Understanding how algorithms and devices use energy to perform tasks could lead to physical chips that carry out computations using less energy. That insight could offer a new direction for addressing the growing energy demands of computers, and the researchers hope it will pave the way for more efficient, more sustainable and environmentally friendly computing technologies.