China recently declined to sign on to an international “blueprint” aimed at establishing guidelines for the military use of artificial intelligence (AI). At the Responsible Artificial Intelligence in the Military Domain (REAIM) summit, attended by more than 90 nations, some 30 countries, including China, chose not to endorse the nonbinding proposal. Although the decision is not necessarily cause for alarm, AI expert Arthur Herman believes China’s opposition likely stems from its reluctance to sign multilateral agreements that could constrain its ability to use AI to strengthen its military edge.

The summit and the blueprint aim to ensure that humans always retain control over AI systems, especially in military and defense contexts. Nations at the forefront of AI development, such as the U.S., stress the importance of keeping humans involved in critical battlefield decisions in order to prevent unintended casualties and avoid machine-driven escalation. AI’s speed can be a decisive advantage in combat, but supporters of the blueprint argue that decisions involving human lives should ultimately be made by people rather than by AI-driven systems.

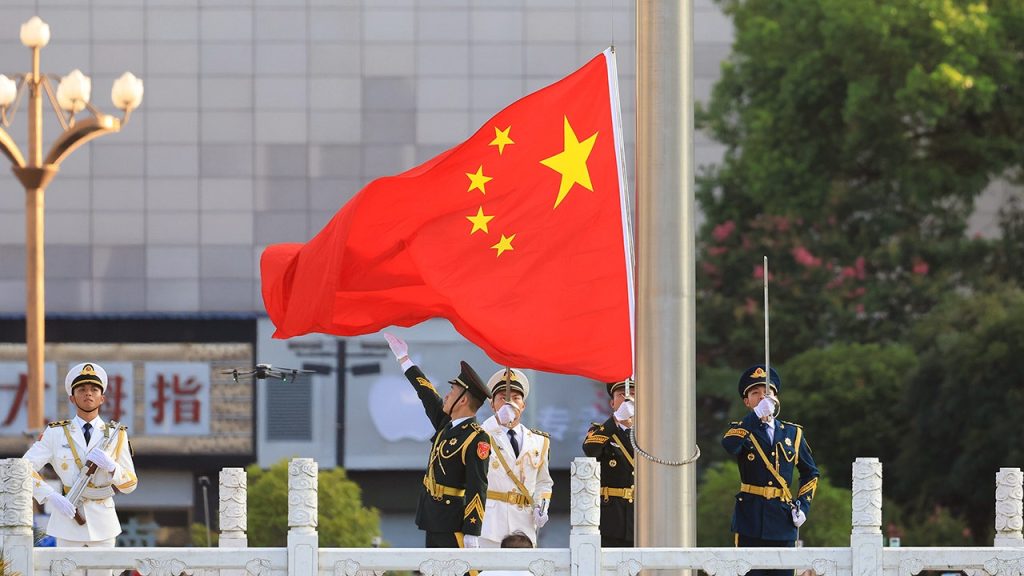

Co-hosted by the Netherlands, Singapore, Kenya, and the United Kingdom, the REAIM summit was the second of its kind, following a similar meeting last year whose outcome China had backed. This year, however, China was among the roughly 30 countries that did not endorse the blueprint’s building blocks for AI safeguards. Chinese Foreign Ministry spokesperson Mao Ning pointed to China’s own principles of AI governance and highlighted President Xi Jinping’s “Global AI Governance Initiative.” Although China did not support the blueprint introduced at the summit, Mao said China remains open to working constructively with other parties on AI development.

Despite efforts to establish multilateral agreements governing military uses of AI, experts such as Herman believe these agreements are unlikely to deter adversarial nations like China, Russia, and Iran from developing harmful AI technologies. Herman argued that deterrence, rather than reliance on ethical standards, is a more effective way to restrain countries determined to push ahead with AI-driven weapons: if those countries understand that developing such weapons could provoke retaliation, they may proceed more cautiously. The absence of China and other countries from the summit’s agreement raises questions about how effective multilateral efforts can be in regulating military applications of AI.