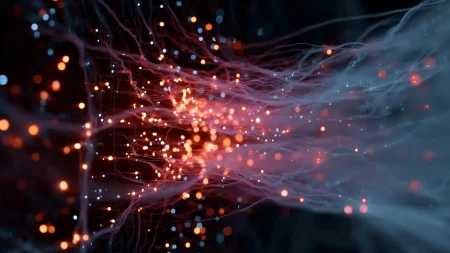

Large language models have shown impressive capabilities, such as generating poetry and computer programs, even though they are trained only to predict the words that come next in a piece of text. However, a recent study found that a popular type of generative AI model can provide turn-by-turn driving directions in New York City with near-perfect accuracy without having formed an accurate internal map of the city. When the researchers closed some streets and added detours, the model’s performance suffered. This suggests that the maps of New York the model was implicitly generating contained many nonexistent streets connecting far-away intersections. The finding has implications for the use of generative AI models in the real world, since a model that performs well in one setting may break down if the task or environment changes slightly.

The study, led by Ashesh Rambachan, a professor at MIT, in collaboration with researchers from Harvard University and Cornell University, introduced new metrics to test whether a large language model (LLM) has formed an accurate world model. These metrics were applied to a class of problems known as deterministic finite automata (DFAs), which involve a sequence of states and rules that must be followed. The researchers found that a transformer, the type of generative AI model commonly used in LLMs, can predict valid moves in a game of Connect 4 without understanding any of the rules. By developing the sequence distinction and sequence compression metrics, the researchers were able to evaluate the coherence of the world model formed by the transformers.
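To make the idea of a DFA and the two metrics more concrete, here is a minimal sketch in Python. It is not the researchers’ implementation. The four-intersection map, the function names, and the single-step simplification are all assumptions made for illustration. The sketch reads sequence compression as "two move sequences that end in the same state should get the same predicted next moves" and sequence distinction as "two move sequences that end in different states should get different predicted next moves".

```python
from itertools import combinations

# Hypothetical toy DFA: (state, move) -> next state.
# States stand in for intersections, moves for driving directions.
TRANSITIONS = {
    ("A", "east"): "B",
    ("B", "south"): "D",
    ("A", "south"): "C",
    ("C", "east"): "D",
    ("D", "west"): "C",
}
START = "A"


def run(seq, start=START):
    """Follow a sequence of moves; return the final state, or None if a move is invalid."""
    state = start
    for move in seq:
        if (state, move) not in TRANSITIONS:
            return None
        state = TRANSITIONS[(state, move)]
    return state


def valid_next(state):
    """Set of moves the DFA allows from a given state."""
    return {move for (s, move) in TRANSITIONS if s == state}


def valid_prefixes(max_len):
    """All valid move sequences from START with at most max_len moves."""
    prefixes, frontier = [()], [()]
    for _ in range(max_len):
        frontier = [p + (m,) for p in frontier for m in sorted(valid_next(run(p)))]
        prefixes.extend(frontier)
    return prefixes


def coherence_scores(model, max_len=3):
    """Return (compression, distinction) scores in [0, 1] for a model.

    `model` maps a move sequence to the set of next moves it predicts.
    Assumption: only one-step continuations are compared, a simplification.
    """
    same_ok = same_total = diff_ok = diff_total = 0
    for p, q in combinations(valid_prefixes(max_len), 2):
        sp, sq = run(p), run(q)
        if sp == sq:
            same_total += 1
            same_ok += model(p) == model(q)
        elif valid_next(sp) != valid_next(sq):
            diff_total += 1
            diff_ok += model(p) != model(q)
    return same_ok / same_total, diff_ok / diff_total


def true_model(seq):
    """A model that tracks the real state of the DFA."""
    return valid_next(run(seq))


def last_move_model(seq):
    """A flawed stand-in that looks only at the most recent move, not the state."""
    guesses = {"east": {"south"}, "south": {"east"}, "west": {"east"}}
    return guesses[seq[-1]] if seq else {"east", "south"}
```

Calling `coherence_scores(true_model)` gives a perfect score on both checks, while `coherence_scores(last_move_model)` falls below 1.0 on both, because that model treats some identical states as different and some different states as identical.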

The study revealed that transformers trained on randomly produced data tended to form more accurate world models compared to those trained on data generated by following strategies. While the transformers were able to generate accurate directions and valid moves in Othello, the new metrics showed that only one of the transformers formed a coherent world model for Othello moves, and none performed well in the wayfinding example. Adding detours to the map of New York City caused all navigation models to fail, indicating that the transformers were not able to adapt to changes in the environment. The researchers identified several issues in the city maps generated by the models, such as random flyovers above streets and impossible orientations of multiple streets.
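The detour test can be illustrated with a small, hypothetical example. The sketch below does not reproduce the study’s setup. It uses an assumed four-intersection map and treats a memorized route as a stand-in for a model without a coherent map, then compares it with a planner that searches the updated map after a street is closed.

```python
from collections import deque

# Hypothetical map: each intersection lists the intersections it connects to.
STREETS = {
    "A": {"B", "C"},
    "B": {"A", "D"},
    "C": {"A", "D"},
    "D": {"B", "C"},
}


def shortest_route(streets, start, goal):
    """Breadth-first search for a shortest route; returns None if unreachable."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for nxt in sorted(streets[path[-1]]):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None


def route_is_valid(streets, route):
    """True if every consecutive pair of intersections is joined by an open street."""
    return all(b in streets[a] for a, b in zip(route, route[1:]))


def close_street(streets, a, b):
    """Return a copy of the map with the street between a and b closed."""
    closed = {node: set(nbrs) for node, nbrs in streets.items()}
    closed[a].discard(b)
    closed[b].discard(a)
    return closed


# A route learned on the original map, then reused after a closure.
memorized = shortest_route(STREETS, "A", "D")
detour_map = close_street(STREETS, "A", "B")
```

Here `route_is_valid(detour_map, memorized)` is `False`, since the memorized route still uses the closed street, whereas `shortest_route(detour_map, "A", "D")` finds the remaining route through `C`. A system that holds an accurate map can replan in this way; one that has only memorized routes cannot.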

The results of the study highlight the capability of transformers to perform well at specific tasks without a deep understanding of the underlying rules. To build large language models that can capture accurate world models, a different approach may be required. The researchers emphasize the need to carefully consider whether these models truly understand the world or if their performance is based on other factors. Future research aims to address a broader set of problems and apply the evaluation metrics to real-world scientific challenges. The work was funded by various institutions, including the Harvard Data Science Initiative, the National Science Foundation, and the MacArthur Foundation.

The researchers’ findings raise questions about the extent to which large language models truly understand the world around them. Despite their impressive performance on certain tasks, these models can struggle when faced with changes or variations in the environment. The new metrics for evaluating the coherence of world models formed by transformers are a step toward ensuring the reliability and robustness of generative AI models. By exploring a diverse set of problems and applying these metrics to real-world scenarios, researchers can gain a better understanding of the limitations and potential of large language models in various applications.