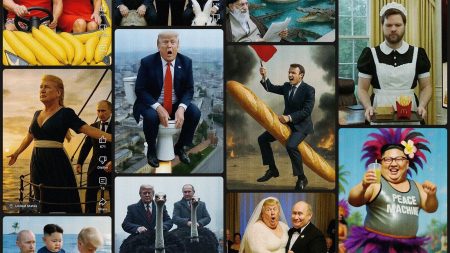

More than 600 hackers gathered at a recent event hosted by Gray Swan AI to compete in a “jailbreaking arena,” trying to manipulate popular AI models into generating illicit content such as bomb-making instructions or climate change disinformation. Gray Swan, a startup focused on AI safety, has gained traction in the industry through partnerships with OpenAI, Anthropic, and the UK’s AI Safety Institute.

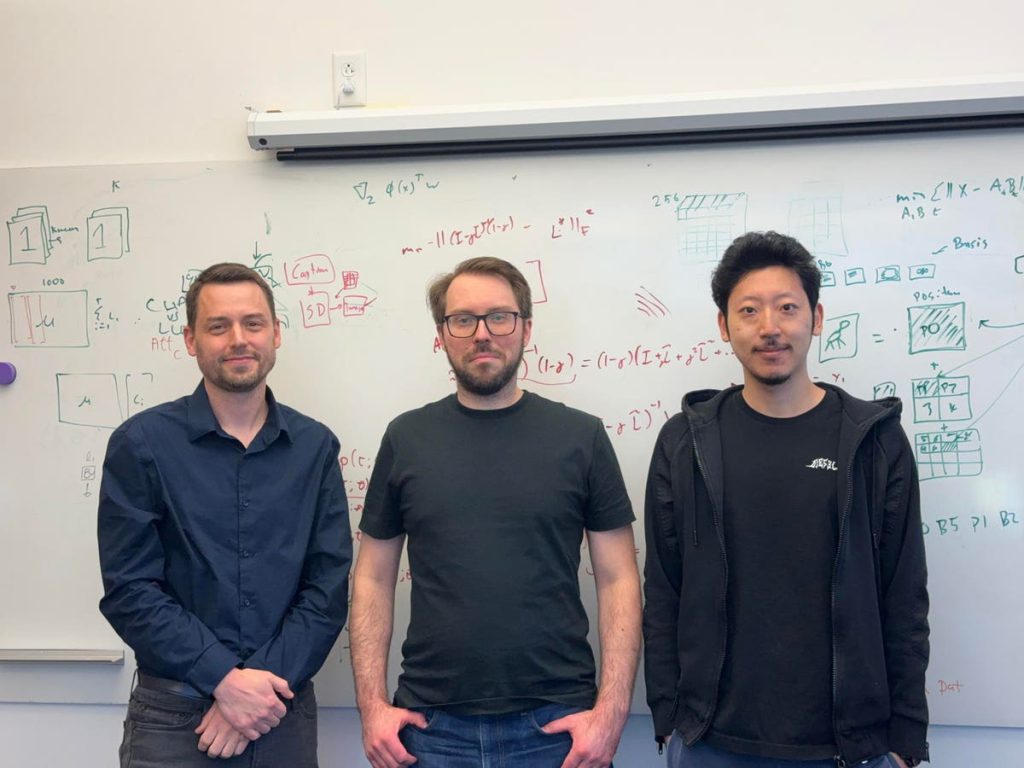

Founded by a group of computer scientists from Carnegie Mellon University, Gray Swan is dedicated to identifying and mitigating the risks posed by AI. The team has developed new defenses, such as “circuit breakers,” that protect models from malicious prompts designed to elicit harmful outputs. When a model is pushed toward objectionable content, a circuit breaker disrupts its reasoning, so the model stops producing coherent output instead of completing the harmful request.

Although attack techniques keep evolving alongside AI technology, Gray Swan has made significant progress in defending models against jailbreaking. The team’s proprietary model, Cygnet, largely withstood hacking efforts at the recent event, demonstrating the effectiveness of circuit breakers in safeguarding AI systems. Gray Swan has also developed a software tool called “Shade” that probes AI models for vulnerabilities and stress tests their capabilities.

With $5.5 million in seed funding and plans for a Series A round, Gray Swan is focused on building a community of hackers to test and improve AI security measures. Red teaming events like Gray Swan’s have become an essential part of assessing AI models for potential vulnerabilities, and companies such as OpenAI and Anthropic also run bug bounty programs.

Independent security researchers, such as Ophira Horwitz and Micha Nowak, have played a critical role in exposing flaws in AI models and helping developers strengthen their defenses. Horwitz and Nowak successfully bypassed Cygnet’s security measures in the recent competition, prompting Gray Swan to announce a new challenge featuring OpenAI’s o1 model. Both researchers received cash rewards and were hired as consultants by Gray Swan.

Gray Swan emphasizes that human red teaming events help prepare AI systems for the adversarial conditions they will face in real-world use. By continuously testing and refining its security measures, the team aims to stay ahead of potential threats and ensure that AI models are deployed safely. As the field of AI security evolves, initiatives like Gray Swan’s jailbreaking events play an important role in advancing the industry and protecting against emerging risks.