In the aviation industry, where pilot performance under stress can be a matter of life or death, comprehensive training is essential. Augmented reality (AR) systems are commonly used to guide pilots through various scenarios, but they can be more effective when tailored to the individual’s mental state. HuBar, a visual analytics tool developed by a research team at the NYU Tandon School of Engineering, analyzes performer behavior and cognitive workload during AR-assisted training sessions such as simulated flights. By revealing how pilots behave and what mental states they are in, HuBar helps researchers and trainers spot patterns and areas of difficulty. They can then use those findings to optimize training programs for better learning outcomes and real-world performance.

Although HuBar was initially designed for pilot training, it has potential applications beyond aviation. Claudio Silva, the lead researcher at NYU, says that HuBar’s comprehensive analysis of data from AR-assisted tasks could improve performance in other complex settings, such as surgery, military operations, and industrial work. Because the system can visualize diverse data and analyze performer behavior, it could enhance training and learning outcomes across a range of fields.

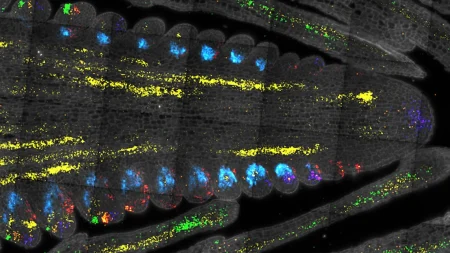

The NYU team introduced HuBar in an aviation study that analyzed data from multiple helicopter co-pilots in an AR-assisted flight simulation. Comparing two of these pilots, HuBar revealed significant differences in their attention states and error rates. Its detailed analysis, which includes video footage, identified the points where the underperforming pilot struggled because of limited familiarity with the task. HuBar’s ability to analyze non-linear tasks and integrate complex data streams lets trainers pinpoint specific areas of difficulty and improve AR-assisted training programs accordingly.

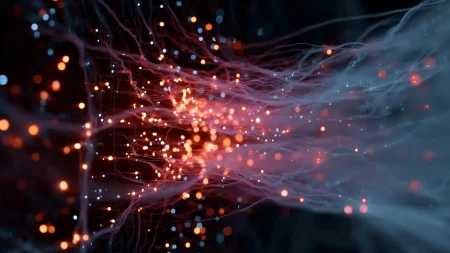

HuBar’s interactive visualization system supports comparisons across sessions and performers, enabling researchers to identify patterns and anomalies in complex, non-sequential procedures. By integrating data on brain activity, body movements, gaze, task procedures, errors, and mental workload classifications, HuBar provides a holistic view of performer behavior during AR-assisted tasks. This lets trainers see how cognitive states, physical actions, and task performance relate to one another, which can inform assistance systems tailored to individual users.

Sonia Castelo, lead author of the HuBar paper and a VIDA Research Engineer at NYU, highlights the system’s ability to provide detailed insights into a person’s mental and physical state during a task, which could enable assistance systems that adapt to the user’s needs in real time. As AR systems advance and become more prevalent, tools like HuBar will be crucial for understanding how these technologies affect human performance and cognitive load. Joao Rulff, a Ph.D. student at VIDA, emphasizes that HuBar is helping to develop the next generation of AR training systems, which could adapt to the user’s mental state across diverse applications and complex task structures.

HuBar is part of a research initiative supported by a $5 million DARPA contract under the Perceptually-enabled Task Guidance (PTG) program, which aims to develop AI technologies that help people perform complex tasks efficiently. By analyzing pilot data from Northrop Grumman Corporation, the NYU team is working to enhance human performance and reduce errors through assistance systems grounded in a detailed understanding of performer behavior and cognitive workload.