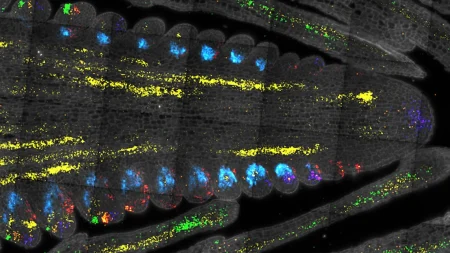

Clio, a new method developed by MIT engineers, lets robots make intuitive, task-relevant decisions by identifying which parts of a scene matter for the tasks at hand. Given a list of tasks described in natural language, the robot determines the level of granularity needed to interpret its surroundings and remembers only the relevant parts of the scene. In experiments in settings ranging from cluttered cubicles to an office building, the team used Clio to automatically segment scenes at different levels of granularity, depending on tasks such as moving a rack of magazines or retrieving a first aid kit.

Remembering only the elements a task requires is useful in many settings, including search-and-rescue missions, homes, and factories where robots work alongside humans. The researchers envision Clio being used wherever a robot must quickly survey and make sense of its surroundings in the context of a specific task. By focusing on the relevant parts of a scene and ignoring irrelevant objects, the robot can interact with its environment and complete its tasks more efficiently.

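Advances in computer vision and natural language processing have enabled robots to recognize objects in their surroundings, not only in carefully controlled environments with a fixed set of pretrained object classes but also in more realistic settings. Researchers have taken an open-set approach, using deep-learning tools to build neural networks that learn from billions of images and their associated text, so that a robot can identify elements in a scene beyond a predefined list of categories. However, parsing a scene in a way that is relevant to a specific task remains a challenge. Typical methods pick a fixed, often arbitrary level of granularity for deciding how to fuse segments of a scene into what the robot treats as a single object, and that level may not suit the robot’s tasks. For example, whether a rack of magazines should count as one object or as many individual magazines depends on whether the task is to move the whole rack or to find one particular magazine. With Clio, the MIT team aims to address this challenge by automatically tuning the level of granularity to the tasks at hand.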
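Open-set pipelines of this kind typically place image segments and text in a shared embedding space and compare them with cosine similarity. The sketch below illustrates only that scoring step, under stated assumptions: the three-dimensional vectors are invented stand-ins for the much larger outputs of a real image and text encoder, and `rank_segments` is a hypothetical helper, not part of Clio.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_segments(segments, task_embedding):
    """Return (name, score) pairs, most similar to the task first (hypothetical helper)."""
    scored = [(name, cosine(vec, task_embedding)) for name, vec in segments.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical embeddings standing in for real image/text encoder outputs.
segments = {
    "red box on shelf": [0.9, 0.1, 0.0],
    "magazine rack": [0.1, 0.9, 0.2],
    "office chair": [0.0, 0.2, 0.9],
}
task = [0.2, 1.0, 0.1]  # hypothetical embedding of "move the rack of magazines"

ranking = rank_segments(segments, task)
print(ranking[0][0])  # → magazine rack
```

Each segment is scored in isolation here: nothing in this step decides whether the magazine rack should be one object or many, which is exactly the granularity question Clio is designed to answer.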

The team’s approach combines state-of-the-art computer vision and large language models to create connections between images and semantic text. Drawing on the information bottleneck, a concept from information theory, the researchers compress a large number of image segments in a way that picks out and retains the segments most semantically relevant to a given task. In real-world environments, Clio segmented scenes and identified target objects from natural-language task descriptions. Running Clio in real time on Boston Dynamics’ Spot quadruped robot, the researchers generated maps showing only the target objects, allowing the robot to approach those objects and complete its tasks efficiently.
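The information bottleneck idea can be illustrated with its standard agglomerative form: start from many small segments, each described by a distribution p(y|x) over how relevant it is to each task, and greedily merge the pair whose merge loses the least information about the tasks, stopping once any further merge would cost more than a threshold. This is a simplified sketch, not Clio’s implementation, and Clio’s exact formulation may differ. The segment names, the uniform weights, the task distributions (which a real system would derive from image-text similarity scores), and the `max_loss` threshold are all hypothetical.

```python
import math


def kl(p, q):
    """KL divergence D(p || q) in nats; terms with p_i == 0 contribute nothing."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def merge_cost(wa, pa, wb, pb):
    """Task information lost by merging clusters a and b: (w_a + w_b) times the
    weighted Jensen-Shannon divergence between p(y|a) and p(y|b)."""
    w = wa + wb
    mix = [(wa * x + wb * y) / w for x, y in zip(pa, pb)]
    return wa * kl(pa, mix) + wb * kl(pb, mix)


def agglomerative_ib(clusters, max_loss):
    """Greedily merge (members, weight, task_distribution) clusters while the
    cheapest merge loses at most max_loss nats of task information."""
    clusters = list(clusters)
    while len(clusters) > 1:
        # Find the pair whose merge discards the least task-relevant information.
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                cost = merge_cost(clusters[i][1], clusters[i][2],
                                  clusters[j][1], clusters[j][2])
                if best is None or cost < best[0]:
                    best = (cost, i, j)
        cost, i, j = best
        if cost > max_loss:
            break  # every remaining merge would blur a task-relevant distinction
        ma, wa, pa = clusters[i]
        mb, wb, pb = clusters[j]
        w = wa + wb
        merged = (ma + mb, w, [(wa * x + wb * y) / w for x, y in zip(pa, pb)])
        del clusters[j]  # j > i, so delete j first to keep index i valid
        del clusters[i]
        clusters.append(merged)
    return clusters


# Hypothetical segments: uniform weight p(x) = 0.25 and made-up p(y|x) over two
# tasks, [move the magazine rack, get the first aid kit].
segments = [
    (["rack frame"], 0.25, [0.95, 0.05]),
    (["magazines"], 0.25, [0.90, 0.10]),
    (["kit case"], 0.25, [0.05, 0.95]),
    (["kit handle"], 0.25, [0.10, 0.90]),
]

for members, weight, dist in agglomerative_ib(segments, max_loss=0.01):
    print(sorted(members), weight)
```

With `max_loss=0.01`, the four fragments collapse into two task-level objects, one per task, and merging stops there because combining the rack with the kit would discard most of the information that distinguishes the two tasks. Raising the threshold coarsens the map; lowering it keeps finer segments.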

Next, the team plans to adapt Clio to handle higher-level tasks and to build on recent advances in photorealistic visual scene representations. Their goal is a more human-level understanding of how to accomplish complex tasks, such as finding survivors in search-and-rescue missions. This research was supported by various organizations, including the U.S. National Science Foundation, the Swiss National Science Foundation, MIT Lincoln Laboratory, the U.S. Office of Naval Research, and the U.S. Army Research Lab Distributed and Collaborative Intelligent Systems and Technology Collaborative Research Alliance. The team’s results are detailed in a study published in the journal IEEE Robotics and Automation Letters.